Working in 3D to render stylized 2D line art: how crazy is that? Not too crazy, and actually pretty awesome. The stable version of Blender recently got a new tool for that, and there are some other interesting ongoing developments.

Science is funny like that: your research can go through multiple stages before it becomes available to end-users, if ever.

Two non-photorealistic rendering (NPR) technologies discussed in this article, Freestyle and Jot, are great examples of this fact of life: both projects were started in the early 2000s, and both are getting the bulk of their real users just now (although anyone who’s been following the 135-page BA thread on Freestyle would readily object).

In this article I’m deliberately focusing on Blender-related technologies. But there’s more to NPR than that. The “Further reading” section at the end is your friend there.

CAN I HAZ FRISTAYL?

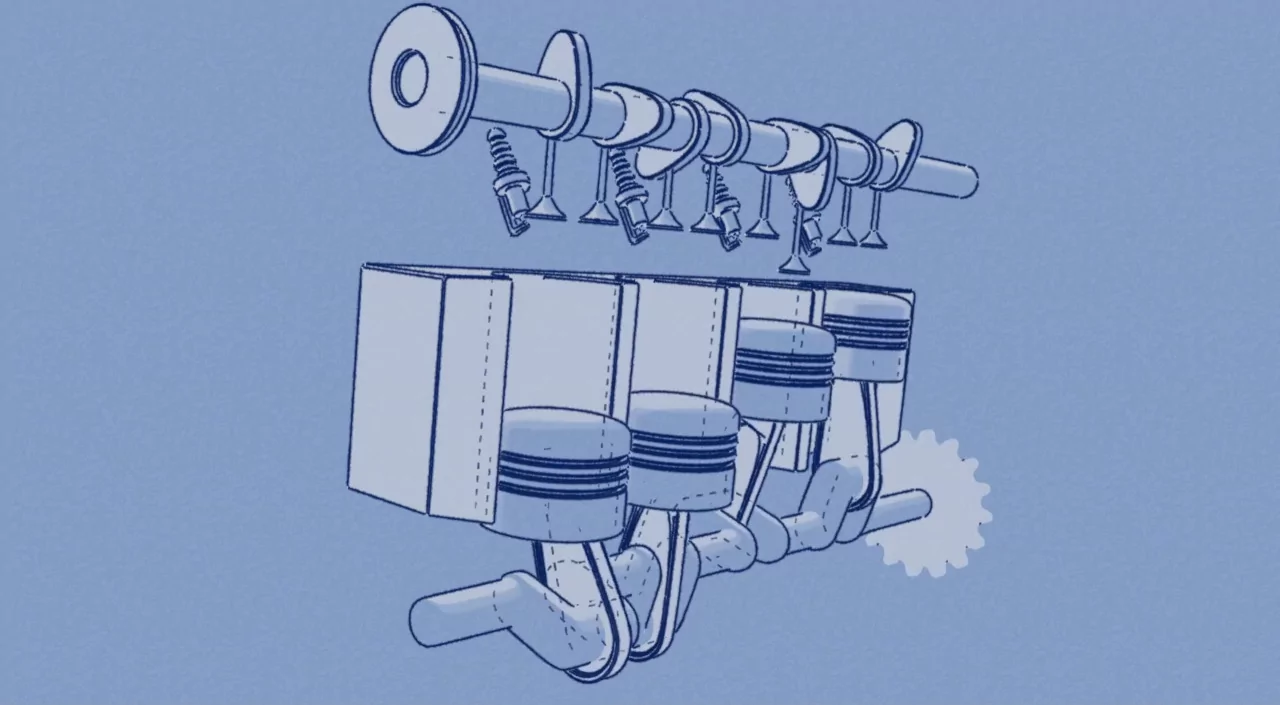

The idea behind Freestyle is to take a 3D scene as input and render a stylized line art image on top of it. The engine is highly configurable and lets you apply a variety of styles.

Naturally, Freestyle gets used in the production of animations. E.g. here is the opening of the “Garuda Riders” animated series:

Or “The Light At The End” short by Chris Burton:

And just for the heck of it, the overweight boss rig animation by mclelun will definitely put a big bright smile on your face. I still have mine.

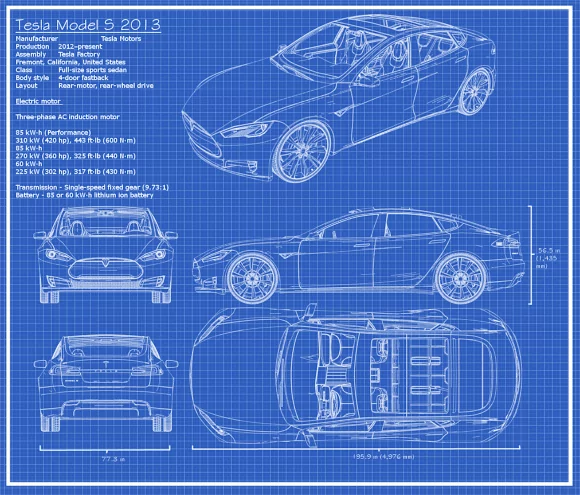

As if various animation styles weren’t quite sufficient, there’s more. How about blueprint-like renders?

Tesla Model S blueprint render by miika2

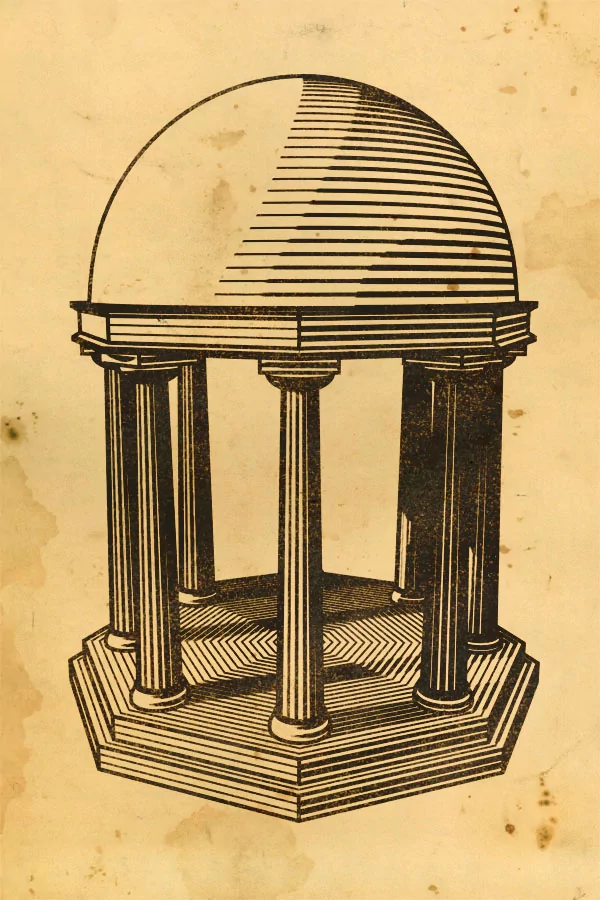

Or artistic hatching?

Temple xylography by Charblaze

Well, you get the picture.

Originally Freestyle was just a standalone application where stylization was programmable. That is, you had to be both an artist and a programmer. No big deal for some, but for others it was the steepest learning curve ever designed.

The relevant papers and parts of software were written by Stéphane Grabli, Emmanuel Turquin, François Sillion, and the omnipresent Frédo Durand:

- Programmable Style for NPR Line Drawing (2004);

- Density Measure for Line-Drawing Simplification (2004).

In 2008, Maxime Curioni, a French student from Télécom ParisTech, picked Freestyle integration as a Google Summer of Code project. The idea was to turn the programming of styles into a bunch of knobs and sliders for the artsy types.

The GSoC project was successfully completed within the defined scope. However, the amount of work was way too large for a single project, and so the journey continued for 4+ more years (our friends at Blender NPR put together a complete story of the undertaking and posted it here).

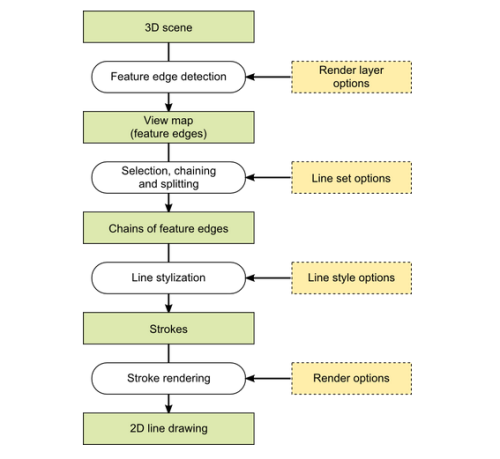

So how does it work? A precise, if slightly boring diagram of the Freestyle pipeline looks like this:

The original paper states:

We propose a new image creation model where all operations are controlled through user-defined procedures. A view map describing all relevant support lines in the drawing and their topological arrangement is first created from the 3D model; a number of style modules operate on this map, by procedurally selecting, chaining or splitting lines, before creating strokes and assigning drawing attributes.

The resulting drawing system permits flexible control of all elements of drawing style: first, different style modules can be applied to different types of lines in a view; second, the topology and geometry of strokes are entirely controlled from the programmable modules; and third, stroke attributes are assigned procedurally and can be correlated at will with various scene or view properties.
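To make the quoted pipeline a bit more tangible, here is a minimal, self-contained sketch of the three stages it names: selecting lines from a view map, chaining them, and assigning stroke attributes. Everything in it (`ViewEdge`, `Stroke`, `select`, `chain`, `style_module`) is a hypothetical stand-in made up for illustration; none of it is the actual Freestyle API, and a real view map carries far more information than 2D endpoints.

```python
from dataclasses import dataclass

# Hypothetical, simplified stand-ins for the concepts in the quote above.
# None of these names come from the real Freestyle API.

@dataclass
class ViewEdge:
    start: tuple           # 2D point in image space
    end: tuple             # 2D point in image space
    nature: str            # e.g. "silhouette" or "crease"
    visible: bool = True

@dataclass
class Stroke:
    points: list
    thickness: float = 1.0
    color: tuple = (0.0, 0.0, 0.0)

def select(view_map, predicate):
    """Selection: keep only the view edges that satisfy the predicate."""
    return [edge for edge in view_map if predicate(edge)]

def chain(edges):
    """Chaining: join edges that share an endpoint into longer polylines."""
    remaining = list(edges)
    chains = []
    while remaining:
        first = remaining.pop(0)
        points = [first.start, first.end]
        extended = True
        while extended:
            extended = False
            for edge in remaining:
                if edge.start == points[-1]:
                    points.append(edge.end)
                elif edge.end == points[-1]:
                    points.append(edge.start)
                elif edge.end == points[0]:
                    points.insert(0, edge.start)
                elif edge.start == points[0]:
                    points.insert(0, edge.end)
                else:
                    continue
                # The edge was attached: drop it and rescan the rest.
                remaining.remove(edge)
                extended = True
                break
        chains.append(points)
    return chains

def style_module(view_map):
    """One 'style module': thick black strokes along visible silhouettes."""
    edges = select(view_map, lambda e: e.visible and e.nature == "silhouette")
    return [Stroke(points, thickness=3.0, color=(0.0, 0.0, 0.0))
            for points in chain(edges)]

view_map = [
    ViewEdge((0, 0), (1, 0), "silhouette"),
    ViewEdge((1, 0), (1, 1), "silhouette"),
    ViewEdge((5, 5), (6, 5), "crease"),
    ViewEdge((1, 1), (0, 1), "silhouette", visible=False),
]
strokes = style_module(view_map)
```

In this toy scene the two visible silhouette edges end up chained into a single three-point stroke, while the crease and the hidden edge are filtered out. A second style module with a different predicate and different attributes could run over the same view map, which is the "different style modules for different types of lines" idea from the paper.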

You probably want a hands-on tutorial at this point. Last week The Spastic Kangaroo posted an awesome video that will walk you through all the settings. Highly recommended:

With Freestyle available in stock Blender for everyone to try, have all NPR dreams come true? Not unless you count a few other interesting projects…

Jot resurrected

In early April this year, Ragnar Brynjúlfsson caused quite a stir in the community when he published an exporter from Blender to the Jot system, thus extending the concept of NPR beyond Freestyle, low-poly, and various abstract art.

What’s Jot, and what’s so cool about it? A video is worth a thousand pictures, quite literally:

So the basic idea is that you can draw stylized animated line art on top of a 3D model live, and the system automates part of the job for you. The 2002 paper specifically mentions:

We focus on stroke-based rendering algorithms, with three main categories of strokes: (1) silhouette and crease lines that form the basis of simple line drawings; (2) decal strokes that suggest surface features, and (3) hatching strokes to convey lighting and tone.

In each case, we provide an interface for direct user control, and real-time rendering algorithms to support the required interactivity. The designer can apply strokes in each category with significant stylistic variation, and thus in combination achieve a broad range of effects.

Jot, created in 2002 by Robert D. Kalnins, Philip L. Davidson, and David M. Bourguignon, is the result of a chain of research projects: from “Direct WYSIWYG painting and texturing on 3D shapes” (1990) to “Decoupling Strokes and High-Level Attributes for Interactive Traditional Drawing” (2001).

Your immediate download for Windows is right here. If you’re curious, the authors produced several papers of their own:

- WYSIWYG NPR: Drawing Strokes Directly on 3D Models (2002);

- Coherent Stylized Silhouettes (2003);

- WYSIWYG NPR: Interactive Stylization for Stroke-Based Rendering of 3D Animation (2004).

This research also served as a starting point for further scientific work, such as:

Source code was eventually released under the terms of GPLv3+ in late 2007 “in the hope that parts of it will be useful to 3D graphics developers”, which didn’t happen until just a few weeks ago, thanks to Ragnar.

Test Render with Blender + Jot by Jameson Ballard

Ragnar explains:

I saw the presentation of Jot shortly after it had been presented at Siggraph in 2002, and thought it looked great. Then nothing seemed to come of it. There was no commercial renderer that used the technology, and no open-source projects.

Then years later a co-worker of mine pointed me to a post on Blender Artist about it, and that the source code had been made available through Google Code. So it’s kind of been at the back of my head for a long time, waiting to be done something with… until last year when I got more into Blender and figured I would take a shot at writing an exporter for it.

Unfortunately Jot wasn’t left in a very good state. The build system in the upstream source code repository is rather arcane, and making the application work on Linux is impossible right now. It’s reported to work under WINE though.

Elliott Sales de Andrade made an attempt to fix it and ported Jot to CMake. He has a public repository on GitHub that you could clone and further improve, should your fancies take you there.

What’s the state of the project then? Basically, Jot awaits its dedicated developer. Someone like Freestyle’s Tamito Kajiyama and Maxime Curioni.

Toon shader: what’s next?

There’s another way to get a cartoon look that somewhat dropped off the radar with the recent introduction of Freestyle to the stable version: the Toon shader in Blender Internal (for a quick introduction you can use an older tutorial at CG Cookie).

Example of using the Toon shader by Jefferson Davis

LightBWK recently posted a rather detailed proposal to create several new toon shaders in Blender Internal:

- Cel Toon, that would use Cel Shading, commonly found in comic books, games and some old animations;

- Soft Toon, a soft toon shading also commonly seen in games and old Disney animations;

- X-Toon, a toon shader from 2006 research that supports view-dependent effects, such as levels-of-abstraction, aerial perspective, depth-of-field, backlighting, and specular highlights.
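The difference between the three shader ideas is easier to see in code. Below is a rough sketch, not taken from the proposal itself: cel shading snaps the diffuse term to a few flat tones, a soft toon blends between tones, and an X-Toon style shader replaces the 1D tone ramp with a 2D table whose second axis is a view-dependent attribute (normalized depth is assumed here). All function names and the sample table are hypothetical.

```python
def lambert(normal, light_dir):
    """Diffuse term: clamped dot product of a unit normal and unit light direction."""
    dot = sum(n * l for n, l in zip(normal, light_dir))
    return max(0.0, min(1.0, dot))

def cel_tone(diffuse, bands=3):
    """Cel shading: snap a continuous diffuse value to one of `bands` flat tones."""
    index = min(int(diffuse * bands), bands - 1)
    return index / (bands - 1)

def soft_tone(diffuse, edge=0.5, softness=0.1):
    """Soft toon: two tones with a smoothstep transition around `edge`."""
    t = (diffuse - (edge - softness)) / (2 * softness)
    t = max(0.0, min(1.0, t))
    return t * t * (3 - 2 * t)

def xtoon_lookup(table, diffuse, detail):
    """X-Toon style lookup: a 2D table indexed by tone (columns) and by a
    view-dependent attribute in 0..1, e.g. normalized depth (rows)."""
    rows, cols = len(table), len(table[0])
    r = min(int(detail * rows), rows - 1)
    c = min(int(diffuse * cols), cols - 1)
    return table[r][c]

# Hypothetical table: full contrast up close (row 0), washed-out tones
# far away (row 1), which mimics aerial perspective.
table = [
    [0.0, 0.5, 1.0],
    [0.4, 0.5, 0.6],
]

facing_light = lambert((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
near = xtoon_lookup(table, 0.9, 0.0)
far = xtoon_lookup(table, 0.9, 0.9)
```

With this table, the same brightly lit surface point gets the brightest tone when it is close to the camera and a flatter, greyer tone when it is far away. Swapping depth for another attribute (surface orientation, distance from a focal plane) is how the other effects in the list would be approached.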

There’s an ongoing thread at BA about that, and another suggested approach is to code some of that in OSL for Cycles. As usual, programming contributions are highly appreciated.

A conversation with Stéphane Grabli and Tamito Kajiyama

Is it possible that Blender will go beyond having a 3rd party exporter to Jot and get it as a native tool? And what’s the outlook for non-photorealistic technologies per se?

We put that and a few other questions to Stéphane Grabli, developer of the original Freestyle, and Tamito Kajiyama, the person who ensured getting Freestyle into upstream Blender.

Stéphane, as far as I can tell, fairly often researchers move on and don’t follow the former area of interest much, as they get on with their jobs and their lives. But you even produced a Freestyle video a few years ago. How closely do you follow Freestyle/Blender progress these days?

Stéphane: Three years ago, as we were publishing an extended version of the original Freestyle paper for Transactions On Graphics, I wanted to demonstrate Freestyle through a small animation which would be shown at the Siggraph Fast Forward. This was a great opportunity to give a serious try to the integration that Maxime and Tamito had been working on for quite some time already.

It was a lot of fun to do this animation. Of course there were still bugs remaining (mostly carried over from the original code), but overall it worked really well. After that SIGGRAPH, despite my best intentions, I couldn’t make the time to keep on testing and contributing to the Freestyle integration into Blender.

I kept an eye on Tamito’s blog and I’m on the bf-blender mailing list, so I knew about major milestones, but I’d say I was following Freestyle’s development in a purely passive way. Now that Freestyle is in the trunk, it makes it much easier to play with it and contribute, so I’m back to having a lot of great intentions :-)

As far as I can tell from your IMDB profile, at ILM you are more likely to be involved with research on photorealistic rendering now. How closely do you follow NPR-related academic works?

Stéphane: Yes, this is true, at ILM I’m generally working on photorealistic rendering. I still have a strong interest for non-photorealistic rendering though, and try to keep up-to-date with what’s happening in research.

What do you think are the most interesting recent academic projects?

Stéphane: I read NPR papers which seem particularly interesting, when I see them, but I’m not actively searching for them, so I’m possibly behind on some techs. Off the top of my head, I can think of a few “recent” papers which I found particularly interesting:

- Spatio-Temporal Analysis for Parameterizing Animated Lines by Buchholz et al (NPAR 2011)

- Active Strokes: Coherent Line Stylization for Animated 3D Models by Benard et al (NPAR 2012)

- OverCoat: An Implicit Canvas for 3D Painting by Schmid et al (SIGGRAPH 2011)

- Coherent Noise for Non-Photorealistic Rendering by Kass et al. (SIGGRAPH 2011)

- Partial Visibility for Stylized Lines by Cole et al (NPAR 2008)

I’m sure there are plenty more really good papers I missed. Generally, I’m interested in any project related to either (1) solving the problem of temporal coherence for stylized lines, (2) improving the visibility computation for smooth silhouette lines or (3) improving the quality of lines obtained from a 3D model.

An experiment with Freestyle by defined

I also found out recently about the Node-based approach to line drawing in Blender which gives very nice results. I think it’s really interesting that 2D approaches like that one and 3D approaches like Freestyle have exact opposite qualities and drawbacks.

The 2D approaches have no problem of line visibility and less problem of line quality, but generally lack semantic knowledge about the lines they manipulate and have less precise stylization control. The 3D approaches are the exact opposite.

And what about practical applications of the research?

Stéphane: As far as practical applications go, the main ones that come to my mind are the various uses of NPR by Disney: subtle stylization of photorealistic rendering as in “Bolt” for instance, the use of NPR to assist 2D animation as in “Paperman”, or their next generation Deep Canvas.

Frankly, I’m expecting that a lot more very interesting applications of NPR will come out of Blender now that Freestyle is part of the official release, whether it be for technical illustrations or artistic ones.

Tamito-san, now that Blender ships with Freestyle, what do you think is the next step for the project apart from (obviously) testing/bugfixing? Are there some new features and ideas you’d like to work on?

Tamito: The highest priority so far has been indeed the stability of the Freestyle rendering process, and many instability fixes and performance improvements have been made on the basis of the original stand-alone Freestyle program. This development direction remains the same even after the official Blender 2.67 release with Freestyle in it.

Apart from the work on general stability, I would like to work on additional Freestyle components as much as time permits. The first thing I have in mind is built-in support for SVG export. There is already an external software package named SVGWriter for exporting Freestyle line drawing in the SVG format.

It is noted that Freestyle for Blender comes with two user interaction modes, i.e., the Parameter Editor mode for artist-friendly graphical interface to line stylization parameters and the Python Scripting mode for full programmability of style modules in the Python scripting language.

Freestyle tests by mookie3d

The SVGWriter is written for the Python Scripting mode, and users need to manually modify the code to change line stylization parameters. The idea is to integrate this module into the Parameter Editor mode to make SVG export easy.

The SVGWriter module is also capable of generating SVG animation. Adding support for animation with HTML5 canvas should be straightforward. This component would provide a killer application of Freestyle for Blender.
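For readers wondering what exporting line drawing to SVG boils down to, here is a small sketch that serializes a list of 2D strokes as SVG polylines. It is not the SVGWriter module mentioned above and does not use the Freestyle API; the stroke dictionaries, the function name `strokes_to_svg`, and the assumption that stroke coordinates have their origin at the bottom left of the image are all made up for illustration.

```python
def strokes_to_svg(strokes, width, height):
    """Serialize strokes as SVG polylines.

    `strokes` is a list of dicts with 'points' [(x, y), ...], 'thickness'
    and 'color' (r, g, b in 0..1). This layout is hypothetical.
    """
    lines = [
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width}" height="{height}" viewBox="0 0 {width} {height}">'
    ]
    for stroke in strokes:
        # Assumption: input Y grows upwards, while SVG's Y grows downwards,
        # so every point is flipped vertically.
        points = " ".join(f"{x:g},{height - y:g}" for x, y in stroke["points"])
        r, g, b = (round(255 * c) for c in stroke["color"])
        thickness = stroke["thickness"]
        lines.append(
            f'  <polyline points="{points}" fill="none" '
            f'stroke="rgb({r},{g},{b})" stroke-width="{thickness:g}"/>'
        )
    lines.append("</svg>")
    return "\n".join(lines)

svg = strokes_to_svg(
    [{"points": [(0, 0), (10, 20)], "thickness": 2.0, "color": (0.0, 0.0, 0.0)}],
    width=100,
    height=50,
)
```

A constant thickness per stroke is a simplification: stylized strokes usually vary in thickness along their length, which would call for filled outlines rather than a single `stroke-width`.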

I am also interested in working on feature line detection at face intersection. Two faces intersecting with each other creates a new edge, which is rather easy to compute especially when using a geometry-based computer graphics library, but adding this functionality to Freestyle is likely a challenge due to required refactoring of the existing code base.
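As an illustration of why the geometric part is the easy half, the sketch below computes the edge created by two intersecting triangular faces in plain Python. It tests each edge of one triangle against the other triangle (a Möller-Trumbore style segment test) and returns the two extreme crossing points. This is only a sketch under stated assumptions: it ignores coplanar faces, uses a single fixed tolerance, and says nothing about how such edges would be fed into Freestyle's view map; all function names are hypothetical.

```python
from itertools import combinations

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def segment_hits_triangle(p, q, tri, eps=1e-9):
    """Return the point where segment p-q crosses triangle `tri`, or None."""
    v0, v1, v2 = tri
    d = sub(q, p)
    e1, e2 = sub(v1, v0), sub(v2, v0)
    h = cross(d, e2)
    det = dot(e1, h)
    if abs(det) < eps:            # segment is parallel to the triangle's plane
        return None
    s = sub(p, v0)
    u = dot(s, h) / det           # first barycentric coordinate
    if u < 0 or u > 1:
        return None
    qv = cross(s, e1)
    v = dot(d, qv) / det          # second barycentric coordinate
    if v < 0 or u + v > 1:
        return None
    t = dot(e2, qv) / det         # position along the segment, 0..1
    if t < 0 or t > 1:
        return None
    return (p[0] + t * d[0], p[1] + t * d[1], p[2] + t * d[2])

def intersection_edge(tri_a, tri_b):
    """Return the two endpoints of the edge where the faces cross, or None."""
    hits = []
    for src, dst in ((tri_a, tri_b), (tri_b, tri_a)):
        for i in range(3):
            point = segment_hits_triangle(src[i], src[(i + 1) % 3], dst)
            if point is not None and point not in hits:
                hits.append(point)
    if len(hits) < 2:
        return None
    # Keep the two hits that are farthest apart, in a deterministic order.
    best = max(combinations(hits, 2),
               key=lambda pair: dot(sub(pair[0], pair[1]), sub(pair[0], pair[1])))
    return tuple(sorted(best))

floor = ((0, 0, 0), (4, 0, 0), (0, 4, 0))          # lies in the plane z = 0
wall = ((1, 1, -1), (2, 1, -1), (1, 1, 1))         # lies in the plane y = 1
edge = intersection_edge(floor, wall)
```

Here the vertical face pokes through the horizontal one, and the new edge runs between the two points where the wall's sides pierce the floor.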

Freestyle tests by procreaciones

Another long-awaited feature is multithreaded rendering. At the moment the Freestyle renderer is a sequential program, and only the final stage of stroke rendering is parallelized thanks to the Blender Internal renderer internally used for that purpose. Since parallel computing is part of my background, parallelizing the rest of the Freestyle rendering process will be a fun project.

A few more features I am interested in over the longer term include fine-grained Python scripting for line stylization and textured strokes.

Stéphane, what do you think are the interesting areas of further research in NPR?

Stéphane: I think I would first focus on perfecting rendering of lines from 3D. In particular:

- improving the quality of feature lines extracted from 3D models;

- finding a robust solution to silhouette visibility;

- implementing temporal coherence of line parameterization from frame to frame in a way that is practical for production.

There are already some very good papers tackling these problems (like the ones I cited above), but I think there is some work left before we have production-ready solutions.

I would then think about alternatives to stroke-based rendering for region filling (I’m thinking painterly rendering). I’ve done some work related to stroke-based rendering and their temporal coherence: this is a difficult problem which might be best avoided :-)

When I was younger I also had the dream of a user interface where the artist would draw a character and the system would automatically capture the 3D shape and the line style and make it possible to animate that character and render it with the exact input style. I feel we may not be that far from such a system anymore.

Are you familiar with Jot?

Stéphane: I know Jot, yes. I have used it only a little bit and that was years ago. But I’ve read most of the publications that went into that system. I think it’s a great tool with many amazing techs that I hope will be progressively integrated into Freestyle.

Freestyle and Jot have some fundamental design differences, the main one being, in my opinion, that Freestyle is built as a programmable system. This makes it possible to decouple style and model, which is essential in a production environment. On the other hand Jot is WYSIWYG and as a result easier to experiment with and to get acquainted with.

A rendering test with Blender and Jot, by Matthieu Dupont de Dinechin

That being said, Tamito did an amazing job at designing an artist-friendly UI on top of Freestyle: artists can now design styles by tweaking UI parameters, without having to go through Python coding!

The amazing part is that Tamito managed to develop this UI without significant compromise on the flexibility afforded by the system. This remarkable work really makes Freestyle as accessible as Jot, I think.

Tamito-san, do you think it’s possible to have both Freestyle and Jot in Blender?

Tamito: I think it is absolutely possible to integrate Jot into Blender in addition to Freestyle. It is noted that Freestyle is a programmable NPR rendering engine which implies a static parameterization of line stylization by means of programming constructs.

Jot looks much more dynamic and interactive than Freestyle. Maybe Jot fits better in the 3D View window so as to enable the dynamic nature of user interaction.

To me the most likely integration scenario is to have both Freestyle and Jot independently in Blender.

Further reading

If you are interested in non-photorealistic rendering in Blender and beyond, here’s some useful information in no particular order:

- Documentation on using Freestyle in Blender.

- A collection of Freestyle-flavored submissions for Blender 2.67 splash screen.

- User manual for Jot.

- The whole story behind creating the exporter from Blender to Jot + some technical details.

- BlenderNPR. An active blog on non-photorealistic rendering with Blender.

- How our proprietary friends do it with 3ds Max.

- NPAR 2013. This year the International Symposium on Non-Photorealistic Animation and Rendering is co-located with SIGGRAPH 2013 and takes place on 19–21 July 2013 in Anaheim, California.

- Microsoft’s papers on Real-time hatching and Fine tone control in hardware hatching.
