LProf and real-world color management on Linux

This interview with Hal V. Engel, lead developer of LProf, was taken back in December 2007. With the introduction of GNOME Color Manager in late 2009 and dispcalGUI in 2008, it has mostly historical value.

Hal, what background do you have with regards to color management? Photography? Prepress?

I am a long time amateur photographer as was my father. My father had a black and white dark room in the basement when I was growing up. Later on I had my own color darkroom and processed my own film (including color slide and negative film in addition to B&W film). A number of years ago I decided to set up a digital darkroom and quickly grew frustrated over the difficulty I was having getting consistent results. After doing some research I discovered color management was the likely answer and my journey down this path started.

LProf was once a work of Marti Maria, mastermind of LittleCMS. Rumors are that it was abandoned by request from HP which Marti joined at that time.

I can confirm that Marti dropped support for LProf as a condition of employment with HP and he has stated this in emails to the lcms-users email list. In addition, Marti had started work on a closed source profiler based on LProf before joining HP and I believe HP acquired the rights to the closed source profiler. So this is public knowledge and not rumor.

This is Marti’s email where he explains what happened.

On Tue, 2004-10-19 at 10:19, marti at littlecms.com wrote:

Hi,Are the reasons known? Just curious..

As Gerhard already noted, I am not allowed to support or maintain the lprof package anymore. The reasons are the company I work for (HP) acquired the sources for its internal use.

Moreover, the technology I was using in the latest profilers (not lprof, but what intended to sell as professional package) is “sensible” in terms my non-disclosure agreement. So, I sacrificed this package in order to keep lcms (the CMM) alive and well.

HP kindly allow me to continue the development of lcms as open source and even more, they does SUPPORT this development. In such way I have contact with ICC members, access to restricted documents, proposed extensions, etc.

So, I’m very sorry for that, but lprof is now effectively dead. At least as open source. It would be nice, however, if anybody would take the sources and continue with the development. But as said, I am not allowed to do that anymore.

King regards,

Marti.

Note when he writes “The reasons are the company I work for (HP) acquired the sources for its internal use.”, he is referring to the closed source profiler not LProf. Also note that ultimately Marti only sacrificed the closed source profiler and was completely wrong about LProf being “effectively dead” since LProf lives on and is stronger than ever.

So, what made you pick the project after all?

I started learning about and using color management on Windows in the late 1990s, when I set up my first digital darkroom. Being an IT professional I never really liked using Windows and wanted to use a more stable and more open platform. About 4 years ago I installed my first Linux boot partition using SuSE 9.1 (I am now a Gentoo user). Having used color management on Windows I knew I wanted those capabilities on Linux as well, but found that most of the functionality was missing or only available by running command line applications (i.e., not integrated into any of the graphics software).

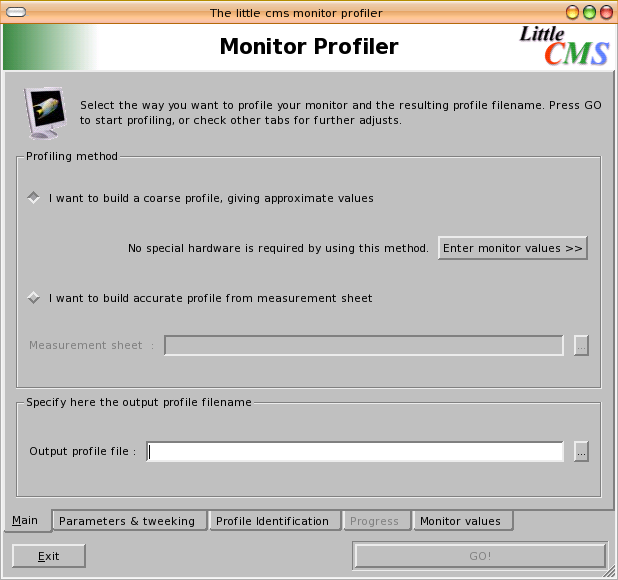

I discovered that the SuSE distribution disks had the last version of LProf that had been released by Marti, which was 1.09, and played around with it. I discovered that it was a good start but that there were lots of issues with it as well. I also found it frustrating that Marti was not moving it forward since it clearly had potential.

After learning of LProf’s orphaned status and spending some time thinking about this I started to get serious about the possibility of resurrecting LProf from its orphaned state and moving it forward. I discovered that there were other individuals who also wanted to do the same thing. For example, Gerard Klaver had archived the source tarball from 1.09 in hopes that at some point work on it would go forward and Craig Ringer from the Scribus project had updated make files for LProf 1.09. There was also a small set of patches available on Gerard’s web site that were created by Mark Rubin.

In May of 2005 I grabbed the source tarball from Gerard’s web site and started playing around with it. It was tough going at first, since I had never worked in C++ or Qt before and I had not worked in C for perhaps 5 or 6 years. So the initial learning curve was steep. I started exchanging emails with Gerard and Craig in July 2005 about the possibility of moving LProf forward as a formal project. Both were supportive and encouraged me to start the process. During the first week of Aug. 2005 Craig emailed Marti to get his blessing and on Aug. 13, 2005 I registered the LProf project with SourceForge.net. At that point I started working on LProf in earnest. Craig, being one of the Scribus developers, had been working in C++ and Qt for some time and would answer my questions and was a big help to me while I worked my way up the learning curve.

Shortly thereafter we released 1.10, which was basically 1.09 with Craig’s updated make files, the Mark Rubin patch set from Gerard’s web site and an update I added so that it would support TIFF IT8 image files. In Aug 2005 there were 64 downloads, in Aug 2006 there were 521, and there are now over 11K from SourceForge.net. In addition, since LProf is commonly included in the package managers for many Linux distros, most Linux users likely get LProf by way of their distro’s package manager rather than downloading it from SourceForge.net, so these numbers are only the tip of the iceberg.

LProf uses both LittleCMS and Argyll. Why are several CMS engines required?

They have different capabilities. LCMS is a nicely written but basic CMS with an easy to use API. But it lacks many of the functions needed by a profiler. For example, it does not have measurement instrument support.

On the other hand Argyll CMS has measurement instrument support and a very good regression analysis back-end, but has a difficult to use API and is in many ways not well suited to use in event driven applications like LProf (for example, it does not pass errors back up the call chain consistently).

So, basically, LProf uses whichever libraries will do the job, and we are not going to get hung up on the fact that we use two different libraries from the same application domain. In fact, we are currently using a customized version of lcms that has some functionality that the stock version does not.

Do you plan to contribute these changes to the stock version?

I have offered these to Marti. So if he wants these changes I am willing to contribute them. However, this functionality is not general purpose and is only useful for those writing profiling software. So I don’t expect these to show up in LCMS any time soon.

What code other than ArgyllCMS and LittleCMS was reused in LProf?

We are going to be using solvopt and have a version of it in our source code tree. But this has not been implemented at this point.

We also include a subset of qwt in our source tree that we statically link into LProf. But we do this mostly because we found that the variations in how qwt was installed made getting consistent builds on different distros problematic at best. For example, some distros have qwt installed as a Qt3 widget set (not a problem for us) and others have it installed as a Qt4 widget set which is a big problem. As a result we build a local static library to link into LProf and this has eliminated the build issues with qwt. We only use one widget from qwt.

In addition, we use wwFloatSpinBox from wwwidgets since Qt3 does not have a float type spin box. We also use the VIGRA libraries for image IO.

On the flip side it appears that digiKam uses some of the LProf code. I have not looked at it in any detail, but it appears that they are using it for the profile checker functionality to display a CIE diagram for user selected profiles. But they may be using other functionality as well.

For a long time using color measurement devices was not possible in Linux and free UNIX systems. Next version of LProf is finally bringing this important feature to users. How far is it from usable state?

If you are lucky and get it at the right moment from CVS it is somewhat usable right now but the algorithms used to create display calibration tables and for generating profiles for display devices are presently very basic. These need work to be of the same quality as the profile generation code used in the camera/scanner profiler which is world class, in my opinion. But current CVS will generate an OK calibration that will result in significant improvements for most users and also create acceptable display profiles just not to the same very high standards as the camera/scanner profiler presently.

The amount of change that has taken place to the LProf code base recently has been huge (perhaps on the order of 5,000 lines of new code in the last 6 months maybe more!!) and current development images still have many bugs and as features are being added we also add more bugs while at the same time fixing others. So the code base currently is very unstable and changing rapidly. How well it works changes from day to day and at times hour to hour.

So how long until it is stable enough to be consistently usable? It depends. If some additional sufficiently skilled developers stepped forward to lend a hand, we could have a release out the door in two to three months including doing UI translation to several languages. If I continue to slog along mostly by myself, as I have for the past few months, it will be longer. As an example, we just had an OS/X developer sign on and the state of LProf on OS/X has never been better although there is still work to be done. So this is an example of what could happen on a broader basis if more developers were to lend a hand. So it depends on the level of support LProf gets from the community developers.

Would support for next new models be a matter of days or, rather, weeks?

New models? Well, Spyder 2 and Huey support just went into CVS and is undergoing testing. At this point the only commonly available meter that is not supported by at least alpha level code is the ColorVision Spyder 3, which has only been out a short time. I am not expecting support for the Spyder 3 to show up any time soon since the vendor has been very hostile to the open source community. So at this point CVS has all of the meters capable of display calibration support that it is likely to have until one of the other vendors (i.e., not ColorVision) has a new model available.

If I remember correctly, Huey automatically changes ICC profile to always match current lighting conditions. How would it work on Linux, if lighting is not controlled? Shouldn’t there be some kind of daemon running in the system?

Let’s get it right — in general, most color management systems assume that users are working under controlled and very specific lighting conditions. This applies to the quality and quantity of light in the viewing area, where images are being displayed on a monitor as well as viewing conditions used for looking at printed images. The approach being used by the Huey software is clearly aimed at the consumer market rather than imaging professionals who will typically be working under controlled viewing conditions.

In reality it is better to do imaging work under tightly controlled viewing conditions like those typically used by imaging professionals. In my view the approach taken by the Huey software is basically a band aid that is designed to mask issues related to incorrect lighting in the viewing area. However I don’t think it can totally compensate for these issues. For example, it does nothing for issues related to viewing printed material and it does not do anything to compensate for poor quality lighting.

As you may have surmised already, I don’t think the Huey’s approach is the best way to handle issues related to incorrect lighting in the viewing area. In fact, I view it as mostly a marketing ploy.

I think the best approach is to educate users so that they understand what is needed to have correct viewing conditions. In addition, I think the lighting issues this Huey feature is designed to fix can be fixed with little expense and effort by the users themselves, basically by installing different light bulbs and putting up good window shades. Anyone who is serious about color will have correct lighting for viewing images on a display and for viewing printed material. Since this is not hard to do, why not do it the right way? For those who are interested, the relevant standards documents are ISO 3664:2000, which sets the minimum standards for this, and ISO 12646, which is more stringent.

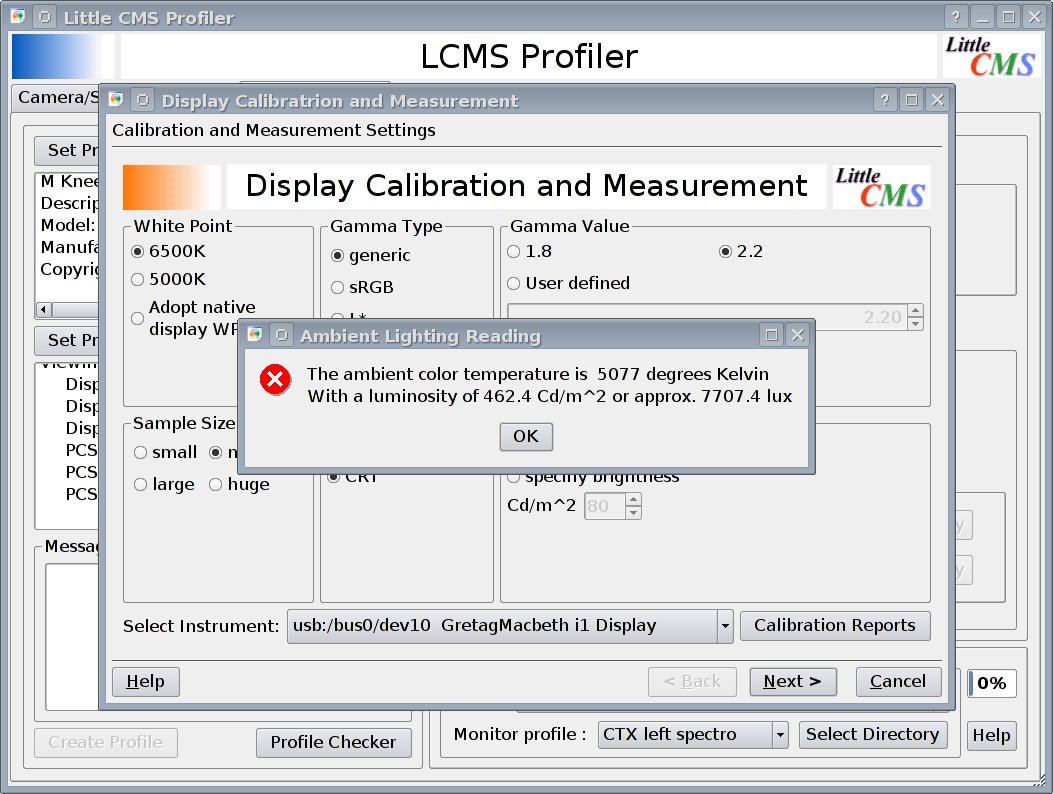

By the way, recent additions to CVS allow LProf to measure and report ambient lighting conditions if the user’s meter supports this feature. With the Huey it will display the lighting level correctly but it does not correctly display the lighting’s color temperature since the Huey’s ambient light sensor only senses the lighting level. This will allow users to measure and make adjustments to their lighting based on objective measurement data rather than guessing at what is correct.

Also a little correction. The Huey software does not change the profile. Rather it changes the display calibration settings, specifically the display luminosity, to match current lighting conditions.

OK! Is there anything both users and developers could do to make the new release happen sooner?

Users can do testing and provide bug reports, feature requests and give us feedback on the UI. Of course, users doing testing must understand that right now parts of LProf CVS are at best alpha level code and that there might be times when there are bugs that make testing difficult or impossible.

User feedback is very helpful and users often find bugs that do not show up on our development machines. This is often because users do things differently than the developers thought they would. The earlier we fix these bugs the faster our progress will be in the end.

At this time the new display measurement/calibration/profiling code has had only limited testing. For example, the only instruments that I know have been tested extensively are the EyeOne Display LT and EyeOne Display 2 (these are really the same device), and they have been tested on Linux, FreeBSD and Windows XP. Our new OS/X developer has done some testing of the Spyder 2 on his machine and I have started testing a Spyder 2, Huey and DTP92 on my machine. Code to support the Spyder 2 and Huey in the measurement device library is very new and it may have some issues. Users testing with other supported devices would be very helpful. Also, testing of the new functionality has been limited for the most part to X11 platforms (Linux and FreeBSD are known to have been tested) with some testing on Windows XP and almost none on a Mac.

Like any OSS project LProf can always use more developers. Some areas that need work at this time include:

- Improved iterative display calibration table creation code. Currently this makes one measurement pass and calculates the tables using a very basic algorithm.

- 3D modeling/regression analysis for the display profiler (currently uses matrix/shaper modeling which is too limited).

I beg your pardon, do you mean some kind of code reuse from ICC Examin?

No, I mean implementing a regression analysis algorithm like that used in the camera/scanner profiler (input profiles) for output device profiles such as displays and eventually printers.

This regression analysis basically takes the noisy sparse measurement data, such as what is picked from the target image in the camera/scanner profiler or the measurements made with an instrument such as a Huey or EyeOne Display, and figures out what set of reasonably smooth 3D curves are a best fit to that data. This is used to create the profile’s Color Look Up Tables (CLUTs) as well as to calculate the device’s white point and other characteristics.
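To make the idea of fitting a smooth curve to noisy, sparse measurements concrete, here is a deliberately reduced one-dimensional sketch in Python. It is not the regression used in LProf or ArgyllCMS, which fits 3D data. It assumes a single channel whose response follows a pure power law, and the function name `estimate_gamma` and the sample readings are made up for illustration.

```python
import math

def estimate_gamma(samples):
    """Least-squares fit of y = x**gamma to (x, y) readings.

    Hypothetical helper for illustration only. x is the normalized
    drive level (0..1) and y the normalized measured luminance.
    Taking logs turns the model into log(y) = gamma * log(x), a line
    through the origin whose best-fit slope is
    sum(lx * ly) / sum(lx * lx).
    """
    num = 0.0
    den = 0.0
    for x, y in samples:
        if x <= 0.0 or y <= 0.0:
            continue  # log is undefined here, so skip black readings
        lx, ly = math.log(x), math.log(y)
        num += lx * ly
        den += lx * lx
    if den == 0.0:
        raise ValueError("need at least one reading with 0 < x < 1")
    return num / den

# Nine made-up readings from a gamma 2.2 response with +/-2% noise.
readings = [
    (i / 10, (i / 10) ** 2.2 * (1.02 if i % 2 else 0.98))
    for i in range(1, 10)
]
print(f"estimated gamma: {estimate_gamma(readings):.3f}")
```

The fitted curve does not pass through every noisy reading. It averages the noise out, which is the general reason a profiler would regress over its measurements rather than interpolate between them.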

I see. And the other areas of contributions would be…

- OS/X video card gamma code only works for single display systems and needs to be enhanced to work for multi-display systems. Our new OS/X developer is working on this at this time and there is a preliminary version in CVS that is untested at this point.

- The display calibration/measurement wizard has some state awareness but users can still do things that put it into an invalid state (i.e., calibrate one screen and measure for profiling on another one). So additional state awareness is needed.

- On Linux, depending on the distro, there may or may not be things that need to be done for udev and/or hotplug to allow USB and serial meters to work correctly. So we would probably have to write fully functional install scripts (meaning that the install scripts take care of hotplug and udev as needed) in scons, which would be an ideal solution, if it can be made to work. This is one of those areas where I would like to find an expert who can help out.

- And, of course, we need to port the GUI to Qt4.

Many bugs and small enhancements need to be taken care of. But the six items above are the big items at the top of my list and anyone with the skills and resources to tackle any of these should contact me. Also, even if someone has more limited skills but can still do things like fixing basic bugs, improving the scons build scripts and UI work please contact me. We need all the help we can get.

It would also be very helpful if we had some developers who work on Windows, particularly with MinGW and NSIS expertise, and/or OS/X, so that we can get these platforms into the same shape as the X11 platforms. The scons builds are now working on all three platforms when using gcc (i.e., MinGW on Windows) and there is a preliminary NSIS script for the MinGW build.

In addition, there are many other non-technical things that need to be done such as writing documentation and translating of both the user interface and documentation. I would also like to create a better logo/icon for the LProf project and could use the help of a graphic artist to get this done. I would also like to add a splash screen that has this new logo.

This is not an exhaustive list and if someone would like to do something not on this list the LProf project will accept assistance on any and every front as long as this is not disruptive to the rest of the project. Again anyone interested in helping in any way with this should contact me.

How exactly could users contribute with testing?

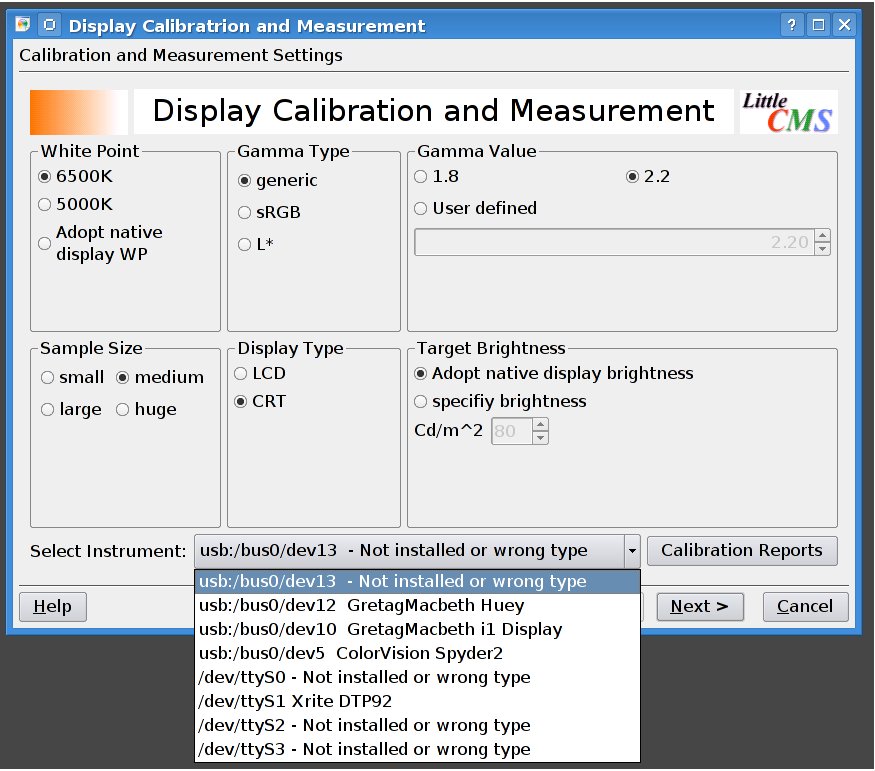

LProf now supports the following meters for display calibration and profiling:

- GretagMacbeth Spectrolino

- X-Rite/GretagMacbeth EyeOne Pro

- GretagMacbeth EyeOne Display

- X-Rite/GretagMacbeth EyeOne Display 2

- X-Rite/GretagMacbeth EyeOne Display LT

- Various EyeOne Display OEM devices

- ColorVision Spyder 2

- X-Rite/GretagMacbeth/Pantone Huey

- X-Rite DTP-92

- X-Rite DTP-94

- X-Rite/GretagMacbeth Huey

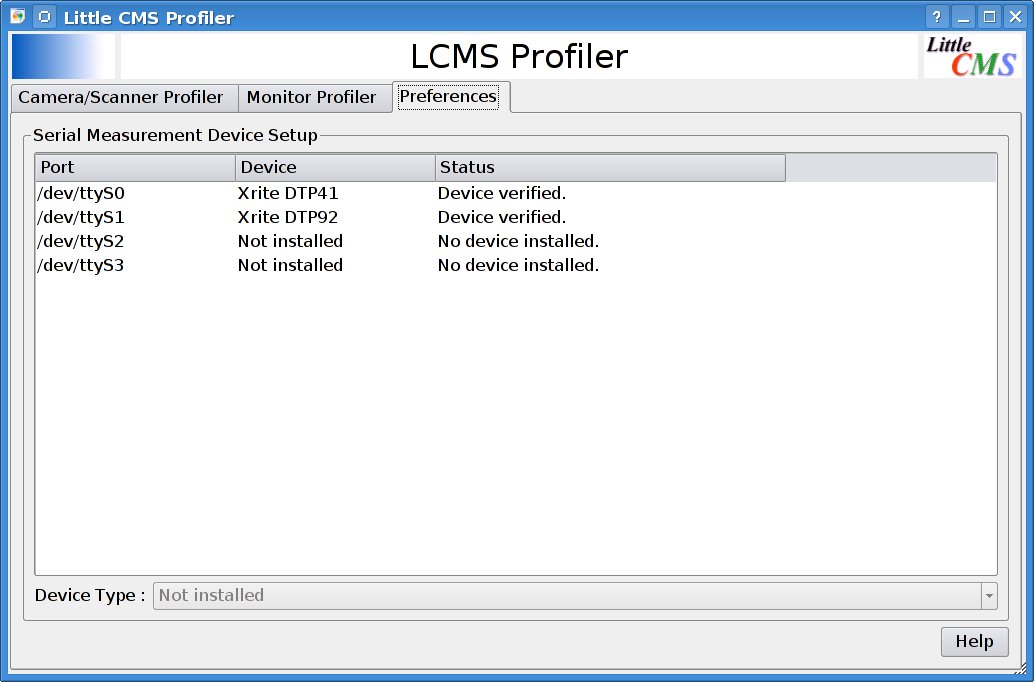

USB devices should automatically show up in the display calibration and measurement wizard and will in fact show up there even if a user plugs the device in after opening the wizard. This is something that not even the software from the vendors can do and the software that comes with the EyeOne Display will not even open if the meter is not plugged in. In fact the LProf display calibration and measurement wizard will actually allow users to plug and unplug these devices and will correctly track what devices are available at any given moment. Serial devices work differently and LProf has an install feature in the Preferences Tab for these devices that also verifies the device when it is installed by the user.
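The interview does not say how LProf implements this tracking. One simple way to get the described behaviour is to re-enumerate the attached instruments periodically and compare each snapshot with the previous one. The Python sketch below shows only that bookkeeping. `DeviceTracker`, `diff_devices` and the `enumerate_devices` callable are hypothetical names, and the enumeration itself (talking to USB) is left to the caller.

```python
def diff_devices(previous, current):
    """Return (added, removed) between two enumeration snapshots."""
    previous, current = set(previous), set(current)
    return current - previous, previous - current


class DeviceTracker:
    """Keeps the set of currently attached meters up to date.

    enumerate_devices is an assumed callable that returns an iterable
    of device identifiers each time it is called.
    """

    def __init__(self, enumerate_devices):
        self._enumerate = enumerate_devices
        self.available = set()

    def poll(self):
        """Re-enumerate once and report what changed since last poll."""
        added, removed = diff_devices(self.available, self._enumerate())
        self.available = (self.available | added) - removed
        return added, removed


# Simulated plug and unplug events instead of real hardware.
snapshots = iter([["Huey"], ["Huey", "DTP-94"], ["DTP-94"]])
tracker = DeviceTracker(lambda: next(snapshots))
for _ in range(3):
    added, removed = tracker.poll()
    print("added:", sorted(added), "removed:", sorted(removed))
```

A GUI would call `poll()` from a timer and refresh its instrument list whenever either returned set is non-empty.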

So the simple test for users to see if their USB measurement device is supported is to build LProf CVS and go to the display calibration and measurement wizard and then plug in the device. For a serial device the user would open the Preferences tab and install the device on the correct port, and if everything is OK the device will pass verification. If the device is capable of display calibration and measurement it will show up in the list of meters in the “Select Instrument:” combo box of the Display Calibration and Measurement wizard after at most a one second delay. The Spyder 2 and Huey code is brand new and only limited testing has been done.

Most of the other meters have had enough testing by other users of the library that they should not be an issue. Also there has been recent work on serial port meters and this appears to be working but has only been tested with the DTP92 and DTP41B.

In few words, how does reverse engineering in measurement devices field happen?

So that this is clear to everyone: on Linux/BSD/unix platforms, for all devices currently in production, all we have is reverse engineered measurement device support. There is NO vendor of these types of devices who supplies either a Linux device interface library or device interface specifications for devices that are currently in production. The strange thing is that all of the vendors for these types of devices at one time made the device interface specifications freely available, so there has been a sea change in how this industry works in this regard in the last 5 or 6 years.

Basically to reverse engineer one of these devices you need to have the device and a working interface (driver and software) for the device. This means for the most part that this process takes place on either a Windows or, much less often, an OS/X box since this is where the vendors provide device support. You then use a piece of software called a port sniffer which records the I/O traffic between the software and the device. This information is used to figure out what the device’s communications protocol, command set, initialization and data packets look like.

These measurement devices have a fairly simple communications/command protocol for the most part. Reverse engineering these devices is not particularly hard (just somewhat time consuming) and someone who knows what they are doing, like Graeme Gill from the ArgyllCMS project who is the author of the meter code used in LProf, can have a working device driver in one to two weeks of effort depending on the capabilities of the device. Compare that to something like a graphics card (for example consider the Nouveau project and its effort to reverse engineer the Nvidia graphics cards), which can take a whole crew of programmers one to two years to reverse engineer a working driver. So in my opinion it is simply foolish for these companies to not work with us by providing the interface specifications since the interface details will become available in the reverse engineered open source interface library code regardless.

By the way, how much different are protocols inside each family of devices?

Generally these devices can be very different at least from vendor to vendor and even for the same vendor. Sometimes the devices from a given vendor can be fairly similar. For example the Huey and the EyeOne Display 2 (and LT) have many similarities. But these are both significantly different from the EyeOne Pro. So it depends.

What do you think about last year’s GMB/Pantone/X-Rite merger? Might it change something for open source world since Pantone is known as a quite aggressive company and X-Rite seems to be more liberal?

I can’t comment on the Pantone merger since I don’t currently know much about it. But I personally consider the X-Rite/GretagMacbeth merger a big disappointment for the open source community and I will continue to feel that way until such time that there is a change in policy by X-Rite. Before the merger X-Rite was a fairly open company that would provide the interface specifications for their meters to anyone who signed up as a developer. Now they will not. To do this you did not have to sign any agreements and there were no restrictions on how the information could be used other than the developer and not X-Rite was responsible for supporting the resulting systems. Everyone I know viewed this as an open source friendly policy and considered X-Rite to be one of the good guys.

GretagMacbeth on the other hand had been closed for some time. The last GretagMacbeth devices for which interface specifications were available was the SpectroLino line of devices which were introduced in the mid 1990s. These were still available at the time of the merger (now discontinued). All recent devices - EyeOne Pro, EyeOne Display series and Huey - are completely closed and no interface specifications are available from X-Rite/GretagMacbeth.

GretagMacbeth had written a Linux version of their EyeOne interface library and this library has existed since at least 2004. They then refused to release it (I asked them to many times). On top of that the EyeOne/Huey SDK license agreement has a clause that precludes making calls to the library in open source software by placing a restriction that requires the permission of GretagMacbeth for anyone other than the original author to distribute the resulting software (i.e., Debian would have to get GretagMacbeth’s approval to distribute any OSS software that made calls to the EyeOne library). I was told by GretagMacbeth management that they had not intended for the restriction to prevent the use of the library in open source software but they admitted that it did in fact do this. As far as I know that restriction is still in force today.

At the time of the merger I and others with ties to the open source community were in contact with both companies and strongly and repeatedly advised them that doing this the GretagMacbeth way was a mistake. They did not listen and instead dropped all devices for which there were published interface specifications and adopted GretagMacbeth policies in this area.

This year LProf took part in Google Summer of Code project. Was it successful? What new features does it bring to users?

Yes it was a success. The Google Summer of Code 2007™ project implemented support for a number of new profiling targets. These include:

- Hutch Color Target™

- GretagMacbeth ColorChecker SG™

- GretagMacbeth ColorChecker DC™

The Google Summer of Code 2007™ project also fixed a number of existing bugs, simplified the reference file installer user interface and enhanced checks made on reference files to make sure they are valid before they are installed.

As a result of the ground work done for the Google Summer of Code 2007™ project support for the GretagMacbeth EyeOne Scanner 1.4 target was added to CVS after the completion of the Google Summer of Code 2007™ project. This was a mostly trivial enhancement because we were able to leverage the Google Summer of Code 2007™ code base. In total LProf now supports 8 profiling targets up from only four prior to Google Summer of Code 2007™. At this point I don’t know of another profiler that supports this many profiling targets.

The student has made a few small contributions since the end of the project and has committed to a few more as well. In fact he created the pick template for the EyeOne Scanner 1.4 target enhancement.

What was the most difficult thing during GSoC 2007 for you?

I think that the hardest part was getting the student up and running. Initially there were some issues with the student getting everything in place so that he could do the actual work. Things like getting LProf to build and run correctly. This was mostly because he wanted to work on a Windows machine and it is much more difficult to set up a build environment on Windows than it is on Linux. In addition, I normally work on Linux so I could only provide so much support to the student. Once this was out of the way he was significantly behind schedule and somewhat disheartened. But I continued to work with him and push him, maybe at times a little harder than I should have. By midterm he was almost on schedule again. Once he realized that he was close to being on schedule he was OK and for the last half of the project I did not need to push him anymore.

From what I have read on the GSoC mentor forums it appears that start up issues are common and I know that this was an issue for the other OpenICC GSoC project as well for exactly the same reason (i.e., getting things working on Windows). Looking back on it I think I would now have worked with the student to get a working Linux installation on his machine well before the startup date for Google Summer of Code 2007™ or I would have insisted that he have a fully functional build on his Windows machine long before the project startup date. Getting Linux working for him would have been fairly quick and he would have had a working environment right away since building LProf on a Linux machine is fairly simple. In addition, he would have learned about Linux which would have been a good thing for him. On the other hand the student did fix some long standing Windows specific issues in LProf. So I guess it is a trade-off. I should add that the student has recently told me that he has installed Linux on his machine and also that he would like to participate in GSoC 2008.

And the LProf project itself…?

I would like to, since there were benefits to the project as well as being a good experience for the student in spite of the rocky start. The student is still making the occasional contribution even though he is very busy at school. For example, he did the template for the EyeOne Scanner 1.4 target that is now in CVS. He also has a nice chunk of change sitting in his bank account that he tells me will help with school expenses. He also tells me that he learned a lot, which I think is the most important accomplishment of the project.

In addition, the GSoC work is now well integrated into LProf and that code base has already been leveraged to add support for a new target type beyond the targets that were added as part of the GSoC project. So overall everyone benefited from the process. I should add that having this work integrated at this point is very unusual for GSoC projects. So we are very happy about being in that position.

In 2007 we’ve seen a lot of exciting things like color management support in GIMP, XSane, digiKam and more applications. What major things are still missing for both users and developers with regards to color management?

The list is fairly long. For example:

X.Org needs to do lots of work to make it CM aware. This includes making RandR 1.2 support widespread. Right now many video drivers are only RandR 1.1 compatible so doing video card gamma work is a crap shoot on X11 systems. But RandR 1.2 support is only the tip of the iceberg. There also needs to be DDC/CI support as well as many other things.

Many DEs such as KDE are not currently CM aware. For example the KDE Control Center -> Peripherals -> Display applet clobbers a calibrated video card gamma table and does not allow the user to reset it. This KDE issue is just one small example of the work that needs to happen at this level.

There is currently no fully functional high level system wide CM settings utility - something like the Windows Color Control Panel Applet or Apple’s ColorSync. There has been some work on the Oyranos project but it is still very immature and really needs to be more closely integrated into the desktop environment, X.Org and other software such as printer and other device drivers to be useful for most users.

Current printer drivers and software are for the most part CM dumb although CUPS 1.2 has limited support for ICC profiles. I also know that the Gutenprint folks want to do some work to add color management support but have yet to implement anything.

There needs to be work done on printer calibration/linearization. None of the current printing systems have more than basic controls for this and the tables used are all hard coded. Now that we have working instrument support there is no reason that users should not be able to create custom linearization tables using functionality that could be included in software like LProf or ArgyllCMS. At some point this will happen but the question is when.

There are many other things that need to be done as well and the above are just some examples.
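To make the printer linearization point above concrete, here is a minimal sketch of how a custom linearization table could be derived from instrument readings. It is not LProf or ArgyllCMS code: the function name `build_linearization_table` and the sample readings are hypothetical, and it assumes one ink channel whose measured response rises strictly with the input level. The idea is simply to invert the measured curve by linear interpolation.

```python
from bisect import bisect_left


def build_linearization_table(inputs, measured, size=256):
    """Return a table mapping a requested (linear) level to a device input level.

    inputs:   device input levels that were printed, ascending, in 0.0..1.0
    measured: instrument reading for each printed patch (hypothetical
              values, e.g. density); must be strictly increasing
    """
    if len(inputs) != len(measured) or len(inputs) < 2:
        raise ValueError("need at least two matching input/measurement pairs")
    if any(b <= a for a, b in zip(measured, measured[1:])):
        raise ValueError("measured response must be strictly increasing")

    # Normalize the readings so the measured response spans 0.0..1.0.
    low, high = measured[0], measured[-1]
    response = [(m - low) / (high - low) for m in measured]

    table = []
    for i in range(size):
        target = i / (size - 1)
        # Find the segment of the measured curve that contains the target.
        k = min(max(bisect_left(response, target), 1), len(response) - 1)
        x0, x1 = response[k - 1], response[k]
        y0, y1 = inputs[k - 1], inputs[k]
        # Interpolate the input level that produces the target response.
        t = (target - x0) / (x1 - x0)
        table.append(y0 + t * (y1 - y0))
    return table


# Made-up readings for a channel that prints darker than requested.
patch_levels = [0.0, 0.25, 0.5, 0.75, 1.0]
patch_readings = [0.0, 0.4, 0.7, 0.9, 1.0]
table = build_linearization_table(patch_levels, patch_readings, size=5)
```

With these made-up readings the table asks for less ink in the midtones than the requested level, which is what compensating for a channel that prints too dark should look like. A real tool would measure many more patches and would likely smooth the curve rather than interpolate linearly.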

Besides proper color management on all production stages, what do you think could make Linux a viable platform for, say, digital photography?

One of the big hangups right now is the lack of more than 8 bit/channel support in a full-featured image editor. We have this support in Cinepaint and Krita, but these editors are missing features that are needed by many photographers. GIMP has a good feature set but only supports 8 bit/channel images. I am hopeful that once GIMP migrates to the GEGL backend this will no longer be an issue. Still, this is likely at least 6 months to a year away.

What existing initiatives and color management related projects would you like to see evolving over time?

Of course, it all needs to move forward. There has been lots of progress with making our core graphics applications CM aware, there are mature CMS libraries and the profiling applications are getting better all the time. So I think the most important areas needing work are now at the systems level. X.Org has already done some work but more needs to be done and the DEs need to step up and start putting the right pieces in place or at least stop doing the wrong things. Until that happens CM will be difficult for your average user to leverage.

In the long run I would like to see this progress far enough that systems admins could set up a full CM workflow just by properly configuring the various systems, such that users who know nothing about CM would automatically be using CM. There is currently no platform where this is possible, not even Windows or OS/X, but I can visualize how this could be made to happen on OSS based systems using Linux/BSD/Unix, X.Org, any number of DEs, printer drivers and other software. If we were able to do this, our platforms would have more advanced CM support than either Windows or OS/X.

You have had a good relationship with the Scribus project, starting with help from Craig Ringer, who cleaned up the build system early on. Now that the OpenICC initiative helps more projects (and even technical specialists from hardware vendors) come together, do you feel that it is easier to cooperate?

The Scribus team has been great to work with in every way. They were supporters of LProf long before I came on board and I think they are relieved to see that it now has an active maintainer. In addition to Craig Ringer, Andreas Vox did some work on the OS/X video card gamma code that is currently in CVS. Peter Linnell has also been very supportive from the beginning.

The LProf and Scribus projects also share information about various things. For example, when I ran into an issue with lcms blowing up on some profiles (not created by LProf) that did not follow ICC standards, the Scribus folks had already dealt with the same issue, apparently only a few weeks before I ran into the problem. They were able to give me a code snippet that I modified and used to correct the issue in LProf.

I should also mention one other individual who has been very supportive of the LProf effort. Oleksandr Moskalenko has been the Debian packager for LProf and he has done a bang-up job of keeping the Debian packages in sync with our releases. In some cases he has created the Debian packages within hours of a release.

Since I began working on LProf there has been very good cooperation between everyone in the OSS community and in the CM area in particular. There are a number of things that could be done to improve this. For example, I know that the X.Org folks want to do what they can to move this forward but they currently do not have enough information about what is needed in all areas.

In other words, the CM community needs to spend some time documenting requirements and specifications for other groups like X.Org to do what is needed.

My guess is that the Scribus team wasn’t your only help…

Sure, we currently have a number of developers who have worked on LProf in the past or are currently active in the project.

Probably the single most important contributor is Gerhard Fuernkranz. Gerhard is responsible for writing most of the new regression analysis code that is used by the camera/scanner profiler and has also contributed many of the UI design ideas that are currently in use. In addition, he did some work on the German translation for version 1.11.4. Without his involvement LProf would not be anywhere near as capable as it is. I simply cannot say enough about how valuable he is to the project.

Joe Pizzi has worked as our Windows person in the past and did a lot of work getting the Windows port in shape for version 1.11.4, which was the first time LProf had been released as a Windows binary since 2001.

Greg Troxel worked on getting the NetBSD build working.

Joseph Simon is the student who worked on the Google Summer of Code 2007 project.

Mark Munte is our new OS/X developer. Because of his efforts the OS/X port is in a very good state and I am looking forward to being able to offer OS/X binary versions of our upcoming releases.

Of course, we have already spoken about Craig Ringer and Gerhard Klaver, who were instrumental in getting the project started. And there have been a number of folks involved in doing UI translation.

Last but not least, there have been three individuals who made monetary contributions to LProf. These are Lars P. Mathianssen, Lars Tore Gustavsen and most recently Leonard Evens. The funds they donated have helped fund the purchase of measurement devices that are being used for developing and testing LProf. We still need more devices for testing, but we have used all of the funds that have been donated as well as some of my own funds, so we currently do not have the funding to purchase them. Specifically, for the work currently underway I would like to get the following devices:

- X-Rite DTP-94

- GretagMacbeth Spectrolino

- X-Rite/GretagMacbeth EyeOne Pro

Only one of these is currently in production, but the devices no longer in production are still widely used. Those out-of-production devices will need to be either donated or purchased on the secondary market. If someone out there has one of these devices that they would like to donate to the LProf project, please contact me. In addition, when we start working on printer profiling we will need to get other devices for testing. I have purchased an X-Rite DTP-41, a DTP22 and a DTP20, and if I could get a DTP51 that would allow us to test the full range of devices supported by the meter library, to make sure that these are correctly supported in LProf and to document how they will function in LProf.

LProf is 2.5 years old now. Do you think you’ve achieved much of what you had in mind when you picked up the project?

Of course, you always set the bar high and if you actually get it all done then perhaps you did not set it high enough. But LProf has come a long way and a high level (i.e., not very detailed) list of new features and fixes would be many pages long. So at this point I can say that many of the goals I set in Aug. 2005 have been achieved, but there is still a long list of things that need to be done. I should add that my list of goals from 2005 included many things that I knew were 3 to 5 years out, and that things are about as far along as anyone could reasonably expect given the limited resources we have to work with.

What are your further plans for LProf?

The short-term plan is to get the new hardware based display calibration and profiling code into a better state and start working toward the release of development version 1.99 (i.e., 2.0 will be the stable release with hardware based measurement support).

After 2.0 is released then it is time to start working on printer profiling (version 3.0) while at the same time enhancing 2.0 with new functionality such as using DDC/CI and/or USB HID Monitor controls to automatically set the display controls during calibration.

In the end I want LProf to be a world class profiling application in every respect. When I started working on LProf none of it could be called world class. The user interface was fragmented, hard to use and lacked integration, the profiling code only correctly supported 8 bit/channel input files, there was no help system, it only supported English in the user interface and there was no measurement device support. At this point at least some of it is now world class and all of the issues listed in the last sentence have been or are in the process of being addressed. The current code base is very advanced and also significantly larger compared to the version that we started with in Aug. 2005 which was just barely usable.

Last but not least, is your photography workflow 100% open source based?

Until recently my photo printing was handled on Windows because I did not have a good profile for my Epson R2400 for the Gutenprint driver. But now that I have a strip-reading spectrophotometer, I have been able to create a custom CMYK profile for my printer, and I am now 100% OSS based. I use PhotoPrint, which is a color management aware front end to Gutenprint, for my photo printing.
